What Is LDA Topic Modeling?

A concise description of how LDA topic modeling works.

LDA topic modeling is topic modeling that uses a Latent Dirichlet Allocation (LDA) approach.

Topic modeling is a form of unsupervised learning. It can be used for exploring unstructured text data by inferring the relationships that exist between the words in a set of documents.

LDA is a popular topic modeling algorithm. It was developed in 2003 by researchers David Blei, Andrew Ng and Michael Jordan. It has grown in popularity due to its effectiveness and ease-of-use, and can easily be deployed in coding languages such as Java and Python.

How LDA topic modeling works

LDA discovers hidden, or latent, topics in a set of documents and uses Dirichlet distributions in its algorithm.

A Dirichlet distribution can be thought of as a distribution over distributions.

In LDA topic modeling, there are two Dirichlet distributions used. The first is a distribution over topics and the second is a distribution over words.

When LDA is applied to a set of documents, it produces a mix of topics for each document and a mix of words for each topic.

It arrives at these mixes by calculating the relative frequency counts between all words in all documents and finding which groups of words fit together. Each resulting group of words forms a topic.

In addition to the frequency counts, the two Dirichlet distributions are used to provide variability in the way that topics are inferred. This variability is important because it helps to generalize the formation of topics, resulting in more realistic topic and word mixes.

One requirement of LDA is that the number of topics needs to be decided in advance. Based on this number—let’s call it K—the algorithm will generate K topics that best fit the data.

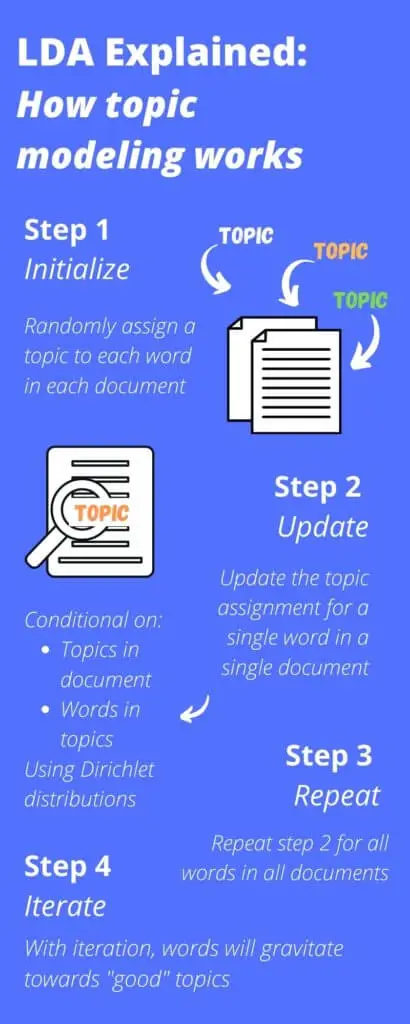

LDA step by step

LDA topic modeling works through an iterative 4-step process:

Step 1–Initialization. Randomly assign topics to each word in each document, resulting in frequency counts for topics in each document and words in each topic.

Step 2–Update. For a single word in a single document, update its topic assignment based on the frequency counts across all words and topics and the additional variability from the Dirichlet distributions.

Step 3–Repeat. Update the topic assignment (ie. repeat step 2) for all words in all documents.

Step 4–Iterate. Go back to step 1 and repeat the whole process.

Why is the LDA topic modeling algorithm iterative?

With each iteration of the LDA algorithm, better and better topics are formed.

The algorithm shows that topic assignments depend on both the topics in a given document and the words in a given topic. Hence, the distributions of both topics and words matter when assigning topics.

With each iteration, the relative counts amongst words and topics, as well as the Dirichlet variability, are used to calculate probabilities for words belonging in topics and for topics belonging in documents.

These probabilities are updated with each iteration. With each update the words in topics, and the topics in documents, move together in a way that produces more probable groupings based on the calculations.

Hence, with more iterations, words gravitate towards each other to form topic and word mixes that are more and more likely. The resulting topics represent the most likely combinations given the number of iterations, the underlying set of documents and the workings of the LDA algorithm.

In practice, you can choose the number of iterations that you want the algorithm to run, and the more iterations the better the results. You’ll need to balance this, however, against the computational cost of more iterations.

The topics produced with LDA tend to make good sense for human interpretation. This is one of the reasons why LDA topic modeling is a popular approach for analyzing unstructured text data.

Curious? To learn more about LDA topic modeling:

- Here’s a comprehensive yet intuitive explanation of how LDA topic modeling works—step by step and with no math

- Here’s an explanation of how to evaluate topic models—an important and sometimes overlooked aspect of topic modeling

- Here’s a hands-on example of how LDA works in practice (with Python code) which uses topic modeling to analyze US corporate earnings call transcripts

In summary

- LDA topic modeling is topic modeling that uses a Latent Dirichlet Allocation (LDA) approach

- LDA is a popular topic modeling algorithm that works by discovering the hidden (latent) topics in a set of documents with the help of Dirichlet distributions

- LDA topic modeling produces a mix of topics for each document and a mix of words for each topic—these represent the most likely combinations

- LDA uses a 4-step iterative process, which produces better results as the number of iterations increases based on the way that probabilities are updated with successive iterations in the LDA algorithm.