Why the Big Future of Machine Learning Is Tiny

TinyML is an emerging AI technology that promises a big future—its versatility, cost-effectiveness, and tiny form-factor make it a compelling choice for a range of applications.

Contents

- What is tinyML?

- The challenges of tinyML

- How tinyML works

- A tiny approach reaps big benefits

- Applications of tinyML

- The future of tinyML

- In summary

In parts of Asia, property damage, crop-raiding, injury, deaths and retaliatory killings are on the rise.

Why? Because conflict between humans and elephants, known as human-elephant conflict (HEC), is increasing in densely populated areas.

According to WILDLABS, 400 people and 100 elephants are killed each year in India alone due to HEC.

One way of reducing HEC is to establish early warning systems for communities living near elephant habitats. But it’s not easy—these habitats are typically hard to access, have limited wireless connectivity and have no electricity.

An emerging artificial intelligence (AI) technology, however, is helping to find solutions—tinyML.

What is tinyML?

TinyML, or tiny machine learning, is the practice of running machine learning models directly on tiny, low-power devices deployed where the data is collected.

TinyML is characterized by:

- small and inexpensive machines (microcontrollers)

- ultra-low power consumption

- small memory capacities

- low latency (almost immediate) analysis

- embedded machine learning algorithms

Let’s look at these a bit closer.

The tiny machines used in tinyML are microcontrollers: compact integrated circuits that combine a processor, memory and input/output on a single chip and are designed for specific tasks. Embedded machine learning makes them smart, and they run on very little power, deliver results almost immediately and are inexpensive. They are proving invaluable in applications such as HEC early warning systems.

Microcontrollers are not new in themselves. There are an estimated 250 billion of them in use today, and they are integral to the growing footprint of the Internet of Things (IoT).

But the microcontrollers used in tinyML are extremely small, sometimes on the order of a few millimeters across, and consume ultra-low power, on the order of a few milliwatts. They also have very small memory capacities, typically no more than a few hundred kilobytes.

The machine learning and deep learning algorithms that make tinyML microcontrollers “smart” are often referred to as embedded machine learning or embedded deep learning. The name reflects the fact that the microcontrollers on which these algorithms run are embedded in the special-purpose systems they belong to.

And since most of the analysis in tinyML systems is conducted directly on the microcontrollers, in the field or at the edge, the results are immediate. This is very useful where low latency matters, such as in fast-moving warning systems, where potential delays in reaching cloud servers are problematic.

The challenges of tinyML

In a world where machine learning algorithms are becoming larger and more resource-intensive, how does tinyML fit in?

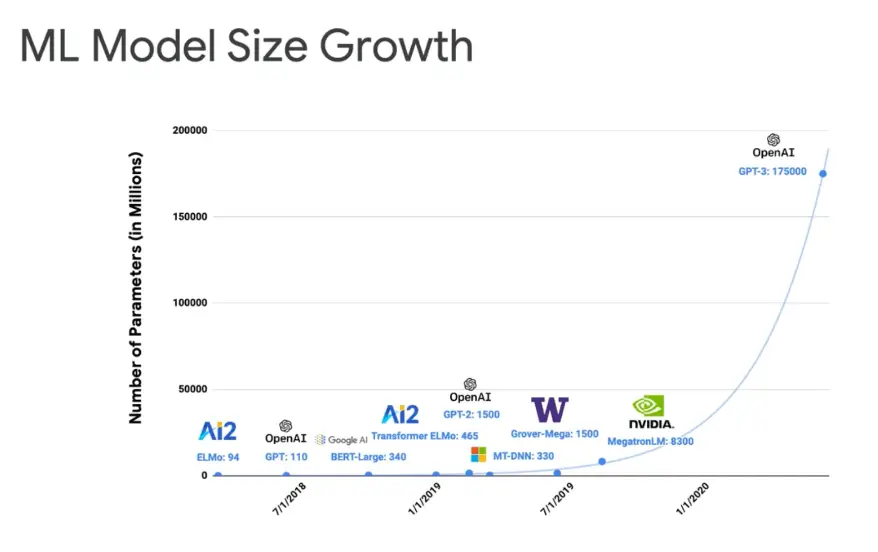

To put things in context, consider the chart below.

In recent years, machine learning models have grown exponentially, from fewer than 100 million parameters to 175 billion parameters (GPT-3) in the three years to 2020!
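To make that scale concrete, here is a back-of-the-envelope calculation. It is a sketch under stated assumptions: every parameter is stored as a 4-byte float, and 256 KB stands in for the “few hundred kilobytes” of microcontroller memory mentioned earlier. The helper names are hypothetical.

```python
# Back-of-the-envelope comparison: large-model weights vs. a microcontroller budget.
# Illustrative assumptions: float32 weights (4 bytes each), 256 KB of memory.
BYTES_PER_FLOAT32 = 4
MCU_MEMORY_BYTES = 256 * 1024  # hypothetical "few hundred kilobytes" budget


def model_size_bytes(num_params: int, bytes_per_param: int = BYTES_PER_FLOAT32) -> int:
    """Bytes needed to store the weights alone (ignores activations and code)."""
    return num_params * bytes_per_param


def max_params(memory_bytes: int, bytes_per_param: int) -> int:
    """How many parameters fit into a given memory budget."""
    return memory_bytes // bytes_per_param


large = model_size_bytes(175_000_000_000)
print(f"175B-parameter model: {large / 1e9:.0f} GB of weights")             # 700 GB
print(f"Times larger than the budget: {large / MCU_MEMORY_BYTES:,.0f}")     # 2,670,288
print(f"float32 parameters that fit: {max_params(MCU_MEMORY_BYTES, 4):,}")  # 65,536
print(f"int8 parameters that fit: {max_params(MCU_MEMORY_BYTES, 1):,}")     # 262,144
```

Even before counting activations and runtime code, the weights alone exceed this budget by a factor of millions, and storing each parameter in one byte instead of four lets four times as many fit.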

What’s more, they are using more energy than ever before. According to one estimate, the carbon footprint of a single training run of BERT (a natural language processing model released in 2018) is roughly comparable to one passenger’s round-trip flight between New York and San Francisco.

Training and deploying modern machine learning algorithms clearly has significant resource demands. Hence, most modern machine learning algorithms are deployed on relatively powerful devices and typically have access to the cloud, where the bulk of resource-intensive calculations take place.

But bigger is not always better, and tinyML has an important role to play at the smaller end of things.

How tinyML works

To work within the significant resource constraints of tinyML hardware, embedded tinyML algorithms adopt the following strategies:

- Only inference of a pre-trained model is deployed (which is less resource-intensive) rather than model training (which is more resource-intensive)—note that future efforts will try to incorporate a degree of training into tinyML systems as the technology develops

- The neural networks that underlie tinyML models are pruned by removing some of the synapses (connections) and neurons (nodes)

- Quantization is employed to reduce the memory required to store numerical values, for instance by translating 32-bit floating-point numbers (4 bytes each) to 8-bit integers (1 byte each)

- Knowledge distillation is used to train a smaller “student” model to mimic a larger “teacher” model, retaining the most important behavior of the original in far fewer parameters
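The pruning and quantization strategies above can be sketched in a few lines of Python. This is a minimal illustration under simple assumptions (magnitude-based pruning and a single symmetric int8 scale per tensor), not the exact method of any particular tinyML toolchain; the weights and function names are hypothetical.

```python
# Minimal sketch: magnitude pruning followed by symmetric per-tensor int8 quantization.
# Pure Python for readability; real toolchains operate on whole tensors.
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in smallest_first[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Approximate the original floats from the int8 codes."""
    return [c * scale for c in codes]


weights = [0.82, -0.03, 0.41, -0.67, 0.01, 0.25, -0.12, 0.05]  # toy layer weights
pruned = magnitude_prune(weights, sparsity=0.5)  # removes the 4 smallest weights
codes, scale = quantize_int8(pruned)
print("pruned:", pruned)
print("int8 codes:", codes, "scale:", scale)
print("storage:", 4 * len(weights), "bytes as float32 ->", len(codes), "bytes as int8")
```

Zeroing weights only saves memory once the model is stored in a sparse format or the pruned neurons are removed outright, and production toolchains typically add refinements such as per-channel scales or zero points.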

Some of these strategies can result in reduced model accuracy.

Pruning and quantizing neural networks, for instance, reduces the granularity with which a network can capture relationships and infer results. Hence, there’s a necessary trade-off between model size and accuracy in tinyML, and the way in which these strategies are implemented is an important part of tinyML system design.
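The trade-off can be seen by quantizing the same weights at progressively lower bit widths. This sketch assumes random toy weights and symmetric uniform quantization; the sizes it reports are idealized, since storing 4-bit or 2-bit values in practice requires packing several into each byte.

```python
# Size vs. accuracy trade-off: quantize the same toy weights at lower and lower
# bit widths and measure how much information is lost.
import random


def quantize(weights, bits):
    """Symmetric uniform quantization to the range [-levels, levels], returned as floats."""
    levels = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits, 1 for 2 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / levels if max_abs > 0 else 1.0
    return [round(w / scale) * scale for w in weights]


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


random.seed(0)
weights = [random.uniform(-1, 1) for _ in range(256)]  # hypothetical layer weights
inputs = [random.uniform(-1, 1) for _ in range(256)]   # hypothetical input vector
reference = dot(weights, inputs)

results = {}
for bits in (8, 4, 2):
    q = quantize(weights, bits)
    results[bits] = mean_abs_error(weights, q)
    size_bytes = len(weights) * bits // 8  # idealized, assumes tight bit-packing
    drift = abs(dot(q, inputs) - reference)
    print(f"{bits}-bit: {size_bytes} bytes, mean weight error {results[bits]:.4f}, "
          f"output drift {drift:.4f}")
```

Compared with 1,024 bytes for the same weights in float32, each halving of the bit width halves storage while the average error per weight grows; at 2 bits, every weight collapses to one of just three values.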

A tiny approach reaps big benefits

Despite its constraints, tinyML is helping to find solutions in situations where conventional AI technology doesn’t work.

In the HEC situation described earlier, for instance, tinyML is ideal given its low cost, low power consumption (a single coin cell battery can power a microcontroller for years) and low data transfer requirements. The embedded ML gives each microcontroller enough intelligence to identify elephant threats in the field, without the need for frequent data transfer to and from a cloud server for making decisions. This translates to considerable power savings and greatly reduces reliance on bandwidth and connectivity.
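The coin-cell claim comes down to simple arithmetic: what matters is the average current, and a microcontroller that sleeps most of the time draws very little on average. The figures below are illustrative assumptions rather than measurements of any specific device: about 225 mAh (roughly the nominal capacity of a CR2032 coin cell), 3 mA while awake, 2 µA asleep, and awake 0.1% of the time.

```python
# Rough battery-life estimate for a duty-cycled microcontroller.
# Every number below is an illustrative assumption, not a device measurement.


def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted average current when awake for `duty_cycle` of the time."""
    return duty_cycle * active_ma + (1 - duty_cycle) * sleep_ma


def battery_life_years(capacity_mah: float, avg_current_ma: float) -> float:
    """Ideal lifetime: capacity divided by average draw (ignores self-discharge)."""
    hours = capacity_mah / avg_current_ma
    return hours / (24 * 365)


# Assumed: 3 mA awake (sensing + inference), 2 µA asleep, awake 0.1% of the time.
avg = average_current_ma(active_ma=3.0, sleep_ma=0.002, duty_cycle=0.001)
print(f"average current: {avg * 1000:.1f} µA")                                 # 5.0 µA
print(f"estimated life on 225 mAh: {battery_life_years(225, avg):.1f} years")  # 5.1 years
```

With these numbers the estimate comes out at roughly five years. A real design would also have to account for self-discharge, temperature and radio transmissions, which is why keeping data transfer to a minimum matters so much.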

More generally, the key features of tinyML (low cost, low latency, low power consumption and minimal data transfer) make it a good fit for many use cases where real-world, practical constraints make these features important.

Applications of tinyML

Let’s look at a few examples of where tinyML is making a big difference:

1. Industrial predictive maintenance

Modern industrial systems need constant monitoring, but in many situations, this isn’t easy with conventional systems.

Consider remotely located wind turbines, for instance. These are hard to access and have limited connectivity.

TinyML can help by deploying smart microcontrollers that have low bandwidth and connectivity needs. This is exactly what Ping, an Australian startup, has introduced in its wind turbine monitoring service.

Ping’s tinyML microcontrollers (part of its IoT framework) continuously and autonomously monitor turbine performance using embedded algorithms. The cloud is accessed only for summary data and only when necessary.
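The general pattern of analyzing locally and uploading only alerts and summaries can be sketched as follows. This is a hypothetical illustration and not Ping’s implementation: the `EdgeMonitor` class, the z-score anomaly rule, the window size and the threshold are all assumptions chosen for clarity.

```python
# Hypothetical edge-monitoring loop: analyze every reading on the device and
# emit a message for upload only for anomalies or periodic summaries.
import math
from typing import Dict, List


class EdgeMonitor:
    def __init__(self, window: int = 100, z_threshold: float = 4.0, min_history: int = 10):
        self.window = window            # readings per summary message
        self.z_threshold = z_threshold  # how unusual a reading must be to alert
        self.min_history = min_history  # readings needed before judging anomalies
        self.buffer: List[float] = []

    def _stats(self):
        n = len(self.buffer)
        mean = sum(self.buffer) / n
        std = math.sqrt(sum((x - mean) ** 2 for x in self.buffer) / n)
        return mean, std

    def process(self, reading: float) -> List[Dict]:
        """Return the messages (possibly none) to upload after this reading."""
        messages = []
        if len(self.buffer) >= self.min_history:
            mean, std = self._stats()
            if std > 0 and abs(reading - mean) / std > self.z_threshold:
                messages.append({"type": "alert", "value": reading})
        self.buffer.append(reading)
        if len(self.buffer) >= self.window:
            mean, std = self._stats()
            messages.append({"type": "summary", "n": len(self.buffer),
                             "mean": round(mean, 3), "std": round(std, 3)})
            self.buffer.clear()
        return messages


# Hypothetical vibration readings: a steady signal with one spike at step 50.
readings = [1.0 + 0.01 * ((i * 7) % 5) for i in range(100)]
readings[50] = 5.0
monitor = EdgeMonitor()
sent = [m for r in readings for m in monitor.process(r)]
print(len(readings), "readings ->", [m["type"] for m in sent])  # ['alert', 'summary']
```

Here 100 readings produce just two uploads, an immediate alert for the spike and one summary, which is where the bandwidth and power savings come from.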

This has improved Ping’s ability to alert turbine operators of potential issues before they become serious.

2. Agriculture

In Africa, the cassava crop is a vital food source for hundreds of millions of people each year. Yet, it’s under constant attack from a variety of diseases.

To help combat this, PlantVillage, an open-source project run by Penn State University, has developed an AI-driven app called Nuru that works on mobile phones without internet access, an important consideration for farmers in remote parts of Africa.

The Nuru app has been successful in mitigating threats to the cassava crop by analyzing images of plants taken in the field.

As the next step in Nuru’s development, PlantVillage plans to use tinyML more extensively, deploying microcontroller sensors across remote farms to provide better tracking data for analysis.

TinyML is also finding novel uses in the production chain of agricultural goods, like coffee beans. As an example, two Norwegian firms—Roest and Soundsensing—have developed a way of automatically identifying the ‘first crack’ during the coffee bean roasting process.

Identifying the first crack is vital—the time spent roasting after the first crack significantly influences the quality and taste of the processed beans. The firms have included a tinyML-powered microcontroller in their bean-roasting machines to do the job, and this has enhanced the efficiency, accuracy, and scalability of the coffee roasting process.
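As a rough illustration of on-device acoustic event detection, the sketch below flags audio frames whose energy jumps far above a running background level. It is an assumption-laden stand-in, not the firms’ method: their system uses a trained tinyML model, whereas this uses a simple energy heuristic on a synthetic signal, and the sample rate, frame size, ratio and smoothing factor are hypothetical.

```python
# Hypothetical stand-in for acoustic event detection: flag audio frames whose
# short-time energy jumps far above a running background estimate.
import random
from typing import List


def frame_energy(samples: List[float], frame_size: int) -> List[float]:
    """Mean squared amplitude of each non-overlapping frame."""
    return [
        sum(s * s for s in samples[i:i + frame_size]) / frame_size
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def detect_events(energies: List[float], ratio: float = 8.0, alpha: float = 0.05) -> List[int]:
    """Indices of frames whose energy exceeds `ratio` times the background level.

    The background is an exponential moving average updated only on quiet
    frames, so a loud event does not immediately raise its own threshold.
    """
    background = energies[0] if energies else 0.0
    events = []
    for i, e in enumerate(energies):
        if background > 0 and e > ratio * background:
            events.append(i)
        else:
            background = (1 - alpha) * background + alpha * e
    return events


# Synthetic test signal: one second of quiet noise at an assumed 16 kHz,
# with a short loud burst standing in for a "crack" at samples 8000-8159.
random.seed(1)
signal = [random.gauss(0, 0.01) for _ in range(16000)]
for k in range(8000, 8160):
    signal[k] += 0.5 if k % 2 else -0.5
energies = frame_energy(signal, frame_size=160)  # 10 ms frames at 16 kHz
print("event frames:", detect_events(energies))  # [50]
```

A learned classifier is better at telling a first crack apart from other loud noises in a roaster, which is one reason to embed a trained model rather than rely on a fixed threshold like this one.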

3. Healthcare

Hackaday, an open-source hardware collaborative, has been combating mosquito-borne diseases such as malaria, dengue fever and the Zika virus with its Solar Scare Mosquito system.

The system works by agitating stagnant water in tanks and swamps, denying the opportunity for mosquito larvae to grow (the larvae require stagnant water to survive).

The water is agitated using small robotic platforms that only operate when necessary (saving energy), using analysis of rain and acoustic sensory data. Summary statistics and alerts (to warn of mosquito mass-breeding events) are sent using low-power and low-bandwidth protocols.

4. Conservation

In addition to the HEC example described earlier, two other tinyML applications in conservation are:

- Researchers from the Polytechnic University of Catalonia have been using tinyML to help reduce fatal collisions with elephants on the Siliguri-Jalpaiguri railway line in India, where there have been over 200 such collisions in the past 10 years. The researchers designed a solar-powered acoustic and thermal sensor system with embedded machine learning to operate as an early warning system.

- TinyML is being used in the waters around Seattle and Vancouver to help reduce the risk of whale strikes in busy shipping lanes. Arrays of sensors with embedded machine learning perform continuous real-time monitoring and provide warning alerts to ships when whales are in their vicinity.
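A common way to combine two sensors in an early warning node, such as the acoustic and thermal railway system described above, is to require them to agree and to confirm the detection over several time steps. The sketch below is hypothetical and not the researchers’ design: it assumes each on-device model already outputs a probability that an elephant is present, and the `ElephantWarning` class, thresholds and confirmation window are illustrative.

```python
# Hypothetical fusion rule for an acoustic + thermal early warning node.
from collections import deque


class ElephantWarning:
    def __init__(self, acoustic_thr: float = 0.7, thermal_thr: float = 0.7, confirm: int = 3):
        self.acoustic_thr = acoustic_thr     # assumed threshold on acoustic-model probability
        self.thermal_thr = thermal_thr       # assumed threshold on thermal-model probability
        self.recent = deque(maxlen=confirm)  # most recent fused decisions

    def update(self, p_acoustic: float, p_thermal: float) -> bool:
        """Return True when a warning should be raised at this time step."""
        # Both sensors must agree, which suppresses single-sensor false alarms.
        detected = p_acoustic >= self.acoustic_thr and p_thermal >= self.thermal_thr
        self.recent.append(detected)
        # Warn only after `confirm` consecutive positive steps.
        return len(self.recent) == self.recent.maxlen and all(self.recent)


node = ElephantWarning()
stream = [(0.9, 0.2), (0.8, 0.9), (0.85, 0.95), (0.9, 0.8), (0.1, 0.1)]
print([node.update(a, t) for a, t in stream])  # [False, False, False, True, False]
```

Requiring agreement and confirmation cuts false alarms at the cost of a short delay and a higher risk of missed detections, another instance of the design trade-offs tinyML systems have to balance.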

The future of tinyML (is bright)

TinyML is bringing enhanced capabilities to already established edge-computing (computing in-the-field) and IoT systems.

Importantly, it is doing this with low cost, low latency, low power and minimal connectivity requirements, which makes tinyML systems easy to deploy across a wide range of applications.

That’s why some refer to the expansion of tinyML as a move towards “ubiquitous edge computing”.

As described in this article, tinyML is providing important solutions to a range of real-world problems that conventional systems cannot tackle.

So, while conventional machine learning continues to evolve towards more sophisticated and resource-intensive systems, tinyML will fill a growing need at the other—smaller—end of the spectrum.

And with the increasing footprint of IoT, where microcontroller device counts are already in the hundreds of billions, tinyML has a big role to play.

This is why Harvard associate professor Vijay Janapa Reddi, a leading researcher and practitioner in tinyML, describes the future of machine learning as “tiny and bright”.

Reddi believes tinyML will become deeply ingrained into everyday life in the years ahead and is, therefore, a real opportunity for anyone who wants to learn about it and get involved.

Also enthusiastic is Pete Warden, Google research engineer and technical lead of TensorFlow Lite, a software framework for implementing tinyML models.

Warden believes that tinyML will impact almost every industry in the future—retail, healthcare, agriculture, fitness, and manufacturing to name a few.

In summary

- TinyML is an emerging area of machine learning which features low cost, latency, power, memory and connectivity requirements, and is adding value in a range of applications

- Given its resource constraints and in-the-field deployment, tinyML systems are typically used for inference of pre-trained machine learning models

- Machine learning algorithms are embedded into tinyML systems, and they adapt to the resource constraints through pruning, quantization and knowledge distillation of conventional algorithms

- TinyML applications span industrial predictive maintenance, agriculture, healthcare and conservation, with many more potential applications possible in future

- The future of tinyML appears bright, and the versatility and cost-effectiveness of tinyML systems suggest that machine learning’s biggest footprint may be through tinyML in the years to come